NAS Adventures Switching to a tiered storage solution


I believe everyone who plans on storing files for the long term convinces themselves they'll remember where and how everything is stored, at least retrospectively. Like with a new garage or a storage cellar, we tell ourselves - "not this time. I'm going to make sure everything stays neat and tidy."

Instead, what inevitably happens is that we start loading our crud into it. Smaller things whose value is harder to quantify end up scattered across the storage space like lost trinkets, making finding certain things more like a memory guessing game than an easy chore.

I, like I imagine many others, ended up in this same situation. But instead of it being confined to my storage closet, it bled into my digital life as well. This became a huge problem for me when trying to figure out how to do backups effectively. Important files weren't in one area; they were scattered across several different storage areas on my NAS.

Important family photos? Make sure you include the folder nested alongside some disposable DVD rips or you might lose a year's worth of your daughter's childhood memories. That's the brutal reality of what could happen if backups take place without understanding where things are, and also why they are there.

If you don't want the fluff, you can skip to "The three tiered NAS backup solution" near the end of the article for what I did to allow for better backups.

How to back up the chaos

When I started making regular backups of my family photos, documents, etcetera, I did what any normal sysadmin would probably do and built a recurring script on my server. It took a list of directories, archived them using a backup solution (which in my case is borg), and was wrapped in a systemd service and timer that ran nightly.

I'd give a code example, but the point I'm making is that this method was actually horrible and a nightmare to maintain. And no, I'm not talking about having to occasionally spend a few moments updating the script so the next backup picked up the other little bits that might have changed.

I'm talking about deduplication and how I was fighting it for no reason. Sure, having a static list of file paths that could change at any moment and cause the archiving job to possibly fail is problematic. But moving files around in a deduplicating backup can have even more problematic consequences.

Deduplicated backups are smarter than linear/statically loaded backups. In the case of borg, it will keep a flattened dictionary of file nodes and track their file signatures (along with other important metadata like the date and name information). When a file gets renamed, modified, or plopped into a different place in the filesystem structure - borg can use an array of checks to see if it's actually a new file, or just a permutation of an existing one.

This saves a LOT of bandwidth when you're dealing with backups that only have incremental differences. Instead of having to compare the entirety of the two disks and ensure parity during a sync-based or linear backup, it just uses that dictionary to check for differences.
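To make the idea concrete, here is a minimal Python sketch of content-based deduplication. It is a simplification and not how borg is actually implemented: borg works on chunks of files rather than whole files, and the `ChunkStore` class, its method names, and the file paths are all made up for illustration.

```python
import hashlib


class ChunkStore:
    """Toy content-addressed store: identical content is stored only once."""

    def __init__(self):
        self.blobs = {}   # content hash -> bytes
        self.index = {}   # file path -> content hash

    def backup(self, path, data):
        """Record `path`; return True only if new bytes had to be stored."""
        digest = hashlib.sha256(data).hexdigest()
        self.index[path] = digest
        if digest in self.blobs:
            return False  # known content: only the path changed
        self.blobs[digest] = data
        return True


store = ChunkStore()
photo = b"pretend these are JPEG bytes"

first = store.backup("photos/2021-06/beach.jpg", photo)
# Same bytes under a new name: nothing new needs to be stored.
moved = store.backup("photos/sorted/beach.jpg", photo)
# Changed bytes (e.g. rewritten metadata): this has to be stored again.
edited = store.backup("photos/sorted/beach.jpg", photo + b" with new EXIF")

print(first, moved, edited)  # True False True
```

Note that the moved file is cheap to store but not free to detect: the store still had to read and hash the whole file before it knew the content was already there.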

Cool, right? So what's the problem, actually? Is there even a problem, or is this all in my head?

Deduplicated backups have a quietly documented disadvantage, and that's the cost of processing. It shows up when a directory structure isn't stable, when files are being plopped from one place in the file tree to another, or worse, when files are being moved AND having their metadata updated by external software. Borg, or really any good deduplicating backup system, still needs to run its file signature checks and other normal checks. Depending on the severity, this can make a speedy backup much slower, as it has to decrypt the existing bundles, compare other metadata, decide if the modification is worth an update, and then finally rectify all of this with a process called rechunking.

All of my backups use encryption, but not all of my backups go to directly attached storage. This becomes a real issue when synchronizing data with low-powered hardware such as a Raspberry Pi 3, where network and USB (disk) traffic share the same bandwidth. Even as an SSHD client, the amount of effort to push and pull object signatures over a connection like that is miserable - and this was happening frequently as the structures in my NAS were changing, with files being moved around by certain Docker images... Things were getting out of hand, very fast.

Tiered storage

I needed to clean house. This deduplication process was supposed to be saving me bandwidth, not costing me extra time. Thankfully, the problems I encountered pointed directly at what I needed for a favorable backup situation.

- I needed stable file structures. No more moving files around trying to come up with a better structure. Moving file structures had unforeseen consequences.

- I needed to stop sparsely spreading files of similar importance across different areas.

- If I was running software that updated file metadata, it needed to keep files in the same or a close-enough location - or at least preserve the naming/folder structure.

Now normally, if you asked a tech-savvy person what they thought about the above, more likely than not the answer you would get would be some form of "just practice, get good at naming things properly and make it a habit." If you spend time researching this topic, like I did, you will probably be faced with the same conclusion.

I was not satisfied with this. There had to be a better way...

An oversimplified overview of classical storage tiers in servers

In high performance storage solutions, there are typically ~two~ three types of storage solutions:

-

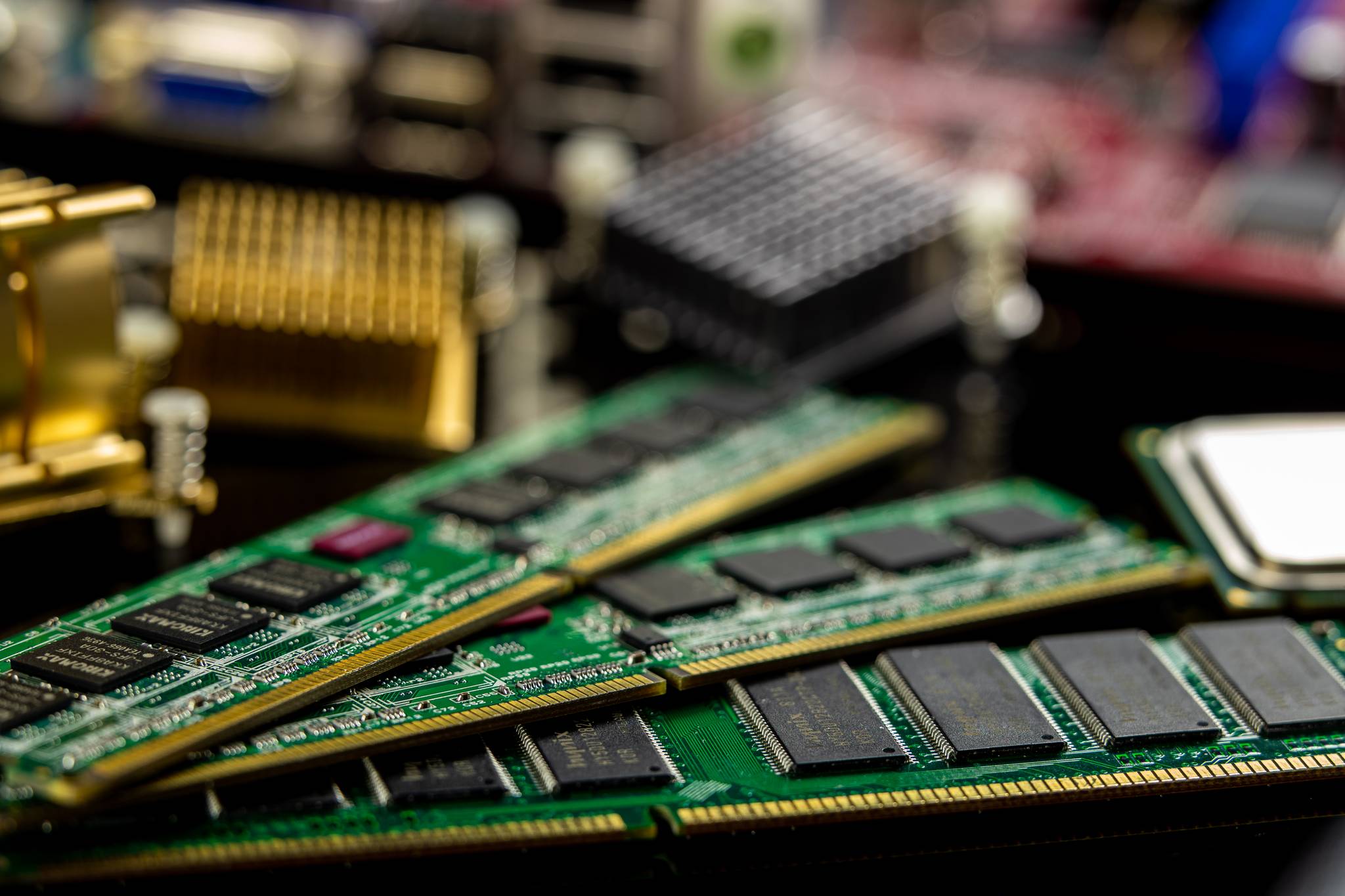

First there is blazing fast volatile storage solutions, which are essentially some glorified iteration of what people used to simply call "RAM Disk." There are so many different names for this now, but one of the ways this gets applied today is through caching. Not only do I not want this, the performance metric this fulfills for for saturating networks that are pushing through several gigabytes worth of data every second - something I have no need for.

- The second tier is solid state storage (SSD). Persistent, mostly drop resistant, and as long as their controllers receive power at least a dozen or so times a year, they keep holding their data. They don't last forever, as each bit acts like a battery, and some SSDs are much more sensitive than others (e.g. those that cram multiple bits into each cell, such as TLC). Without arrays, SSDs can saturate most home and small-business networks with ease. With arrays, it's possible for SSDs to push enough gigabytes per second to almost rival RAM disks. One major drawback of SSDs is that they don't have as much writable life span as a disk drive, but they make up for this in pretty much every other way.

  Another rarely talked about issue with some SSDs is their vulnerability to cosmic rays - high-energy particles that can flip an SSD cell from 0 to 1 on a whim. Find yourself unlucky enough to own a cheap or older SSD without any form of active error correction - and well...

-

And finally, there's

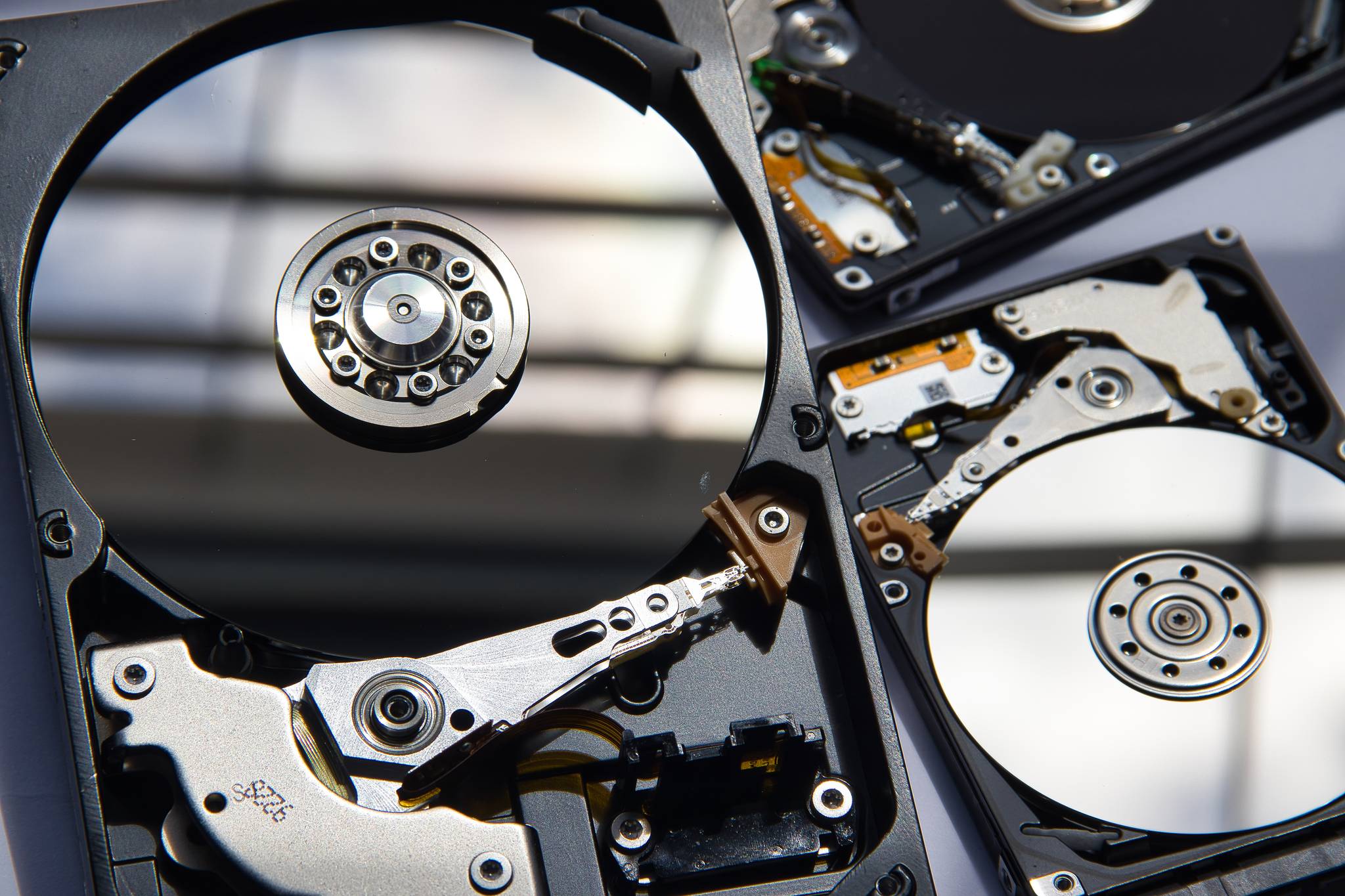

garbage, I mean hard disk storage. I don't like these. Some people really like disk drives as they are a much more economic alternative to SSD, as platters are less costly to manufacture - cheaper materials and less science involved than building NANDs and controllers. They are always slower for accesses and writes than a SSD, especially for random reads and writes (which can be a problem when file structures are moving around - even unintentionally).I can't stress enough how much I loathe disk drives. There's an immense amount of liability keeping drives from failing, insomuch that anyone that uses them seriously is always forced to use a redundant array of them in the inevitable one of more of them choose to fail. Have an array of them? Make sure they don't resonate too well or they will lower their lifespan. Also, don't leave your music on for too long or shout at them. Also, don't leave them unattended as they might leak their "high performance gases" causing the drive to write slower and/or start chomping bad sectors into your platter...

Sure they work. Expensive drives even work great. But this is the last tier for a reason, and that's because it's not something I want to invest in for redundancy, or have to deal with for things I need.

Taking the above and applying it to backups

After looking at the above, I basically derived the following conclusions:

- I don't have a lot of bandwidth to saturate, so I don't need RAID, volatile storage, or cache.

- I don't like volatile storage, so a RAM disk makes no sense for my needs. If I were serving my NAS to millions of users, sure - but for my needs I do not need it.

- I would never trust a hard drive as a long-term primary storage medium. As such, I would never be willing to invest in a large array of them.

- I don't constantly pump data into my NAS, meaning the data is usually at rest. This means the writable life limitations of SSDs aren't really a problem for me, which lowers the likelihood of a normal lifecycle failure.

And from there, my tiered backup solution was born:

The three tiered NAS backup solution

On a 2.5 gigabit network, the theoretical maximum transfer speed is about 312 megabytes per second (2.5 gigabits divided by 8 bits per byte). A normal SATA-3 SSD (which is what my NAS uses) can theoretically max out at roughly 600 megabytes per second, which means storage speed is not the bottleneck there.
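The arithmetic behind those two numbers can be sketched in a few lines of Python. The helper name `gigabits_to_megabytes` is made up for this example; the 0.8 factor reflects the 8b/10b line encoding SATA uses, and real-world throughput will be lower on both sides once protocol overhead is counted.

```python
def gigabits_to_megabytes(gigabits_per_second, encoding_efficiency=1.0):
    """Convert a line rate in Gbit/s to MB/s (decimal units, 8 bits per byte)."""
    bits_per_second = gigabits_per_second * 1_000_000_000 * encoding_efficiency
    return bits_per_second / 8 / 1_000_000


# 2.5 Gbit/s Ethernet, ignoring framing and protocol overhead.
network = gigabits_to_megabytes(2.5)

# SATA-3 runs at 6 Gbit/s on the wire, but 8b/10b encoding means only
# 8 out of every 10 bits carry data.
sata3 = gigabits_to_megabytes(6.0, encoding_efficiency=0.8)

print(f"network: {network:.1f} MB/s, SATA-3: {sata3:.1f} MB/s")
print("storage is the bottleneck:", sata3 < network)
```

Even a single SATA SSD has close to double the headroom the network can use, which is why an array buys nothing here.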

Knowing this, in terms of hardware I broke my NAS into two primary tiers:

- fast: an LVM group/volume of only SSDs that can be extended with additional storage as I need it. If I ever do decide to use RAID, LVM supports RAID as a storage configuration. Files on the SSDs are highly important or frequently modified.

- slow: an LVM group/volume of only disks. Slow and chungus, it holds at-rest data that will be read much more frequently than it will be written. This data is not backed up, as the medium itself is disposable.

And then, I broke these mediums into their own tiers:

- fast/t1: Highest importance and the most frequently accessed (family photo sync, secured documents). Backed up every night, and limited to strict file structures. Photos are grouped in folders by their month and year, and are not allowed to be renamed. Important documents are securely backed up using this method.

- fast/t2: Medium importance, files that are likely to be needed but not essential. Code and project archives, long form (non-secured) documents, receipts, manuals, and some service caches. This tier is only backed up every week.

- slow/t3: Disposable tier. Local copies of cloud-based content (e.g. ebook collections, long form media formats). Anything that I would never lose sleep over if it vanished overnight, but also don't need to access frequently or concurrently.
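As a rough illustration of how the tiers above could drive a backup job, here is a small Python sketch. It is not my actual script: the mount points under `/srv/nas`, the `Tier` class, and the helper names are all hypothetical, and the command it builds assumes borg 1.x's `REPO::ARCHIVE` syntax. It only builds the command and never runs it.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Tier:
    path: str                     # mount point (hypothetical)
    interval_days: Optional[int]  # None means "never backed up"


TIERS = {
    "t1": Tier("/srv/nas/fast/t1", interval_days=1),    # nightly
    "t2": Tier("/srv/nas/fast/t2", interval_days=7),    # weekly
    "t3": Tier("/srv/nas/slow/t3", interval_days=None),  # disposable
}


def tiers_due(days_since_last_backup):
    """Return the names of tiers whose backup interval has elapsed.

    A tier with no recorded backup is treated as due.
    """
    due = []
    for name, tier in TIERS.items():
        if tier.interval_days is None:
            continue
        elapsed = days_since_last_backup.get(name, tier.interval_days)
        if elapsed >= tier.interval_days:
            due.append(name)
    return due


def borg_create_command(repo, archive_name, tier):
    """Build (but do not run) a `borg create` invocation for one tier."""
    return ["borg", "create", "--stats", f"{repo}::{archive_name}", tier.path]


print(tiers_due({"t1": 1, "t2": 3}))  # ['t1']
print(borg_create_command("/mnt/backup/repo", "t1-nightly", TIERS["t1"]))
```

The point of the design is that each tier is a single stable path with a single schedule, so there is no hand-maintained list of directories to drift out of date.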

With this structure, my backups have not only become much easier to maintain, but the logical file structure has also helped me track the priority and persistence of certain digital content. My highly important documents and photos are on a much more robust medium, and my disposable files are on a much more disposable medium.

Bonus - LTO tapes

There's a way to get the cost benefit of hard drives without all of the mechanical liabilities. In the future I plan on experimenting with enterprise magnetic tapes for long-term at-rest backups. Instead of relying on an always-on server sync, I would give people I trust some LTO tapes and check on them once in a while. There will be a post made once this happens.